Last week I asked 50 readers about their biggest AI frustrations. The pattern was clear: most of you are experimenting with AI tools, but struggling to create reliable systems that actually save time rather than creating more work. Today, I'm sharing my personal "AI Command Center" framework that helped me reclaim 5+ hours weekly.

The Delegation Paradox

Why most AI workflows fail: The hidden time cost of inconsistent prompting

Most people approach AI tools with a haphazard "let's see what happens" mentality. You type different versions of the same request, get inconsistent results, and end up spending more time fixing outputs than you would have spent doing the task yourself.

Sound familiar?

Here's the problem: inconsistent inputs guarantee inconsistent outputs. When you change how you prompt AI tools each time, you're essentially training a new assistant for every task. It's like hiring someone new every day without providing documentation or training.

The hidden cost? Not just the time spent rewriting prompts, but the mental bandwidth consumed by constant experimentation.

The truth about AI reliability: Why 80% accuracy requires a different approach

The breakthrough moment in my AI journey came when I accepted a simple truth: in my experience, current AI tools get a task right roughly 80% of the time. Treat that number as a working rule of thumb rather than a benchmark. They're extraordinary, but not perfect.

The typical response to this reality is either:

Perfectionist paralysis: "If it's not 100% reliable, I can't trust it"

Blind delegation: "AI will figure it out" (followed by disappointment)

Neither approach works. The key is designing systems that leverage the 80% while efficiently managing the 20% gap.

This means:

Identifying tasks where 80% accuracy is sufficient

Creating guardrails for catching the 20% errors

Developing efficient human-AI collaboration protocols for complex tasks
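For readers who like to see the arithmetic, here is a minimal Python sketch of the trade-off behind "80% is sufficient". It assumes a simple model that is not spelled out above: every delegated task costs some AI time plus a review, and the share that fails (20% by default) also costs a fix. The function names and sample numbers are hypothetical.

```python
def expected_minutes_with_ai(ai_minutes, review_minutes, fix_minutes, reliability=0.8):
    """Expected minutes per task when delegating.

    Assumed model: every task pays for the AI run and a human review,
    and the unreliable share (1 - reliability) also pays for a fix.
    """
    error_rate = 1.0 - reliability
    return ai_minutes + review_minutes + error_rate * fix_minutes


def worth_delegating(manual_minutes, ai_minutes, review_minutes, fix_minutes, reliability=0.8):
    """True when the expected delegated time beats doing the task by hand."""
    delegated = expected_minutes_with_ai(ai_minutes, review_minutes, fix_minutes, reliability)
    return delegated < manual_minutes


# Hypothetical task: 30 minutes by hand, 2 to prompt, 5 to review, 20 to fix a miss.
print(expected_minutes_with_ai(2, 5, 20))  # roughly 11 minutes
print(worth_delegating(30, 2, 5, 20))      # delegation pays off here

# Hypothetical task: 10 minutes by hand, but slow to review and fix.
print(worth_delegating(10, 2, 6, 15))      # the 20% gap eats the savings
```

The useful part is less the exact numbers than the shape: review time and fix time, not the AI's speed, usually decide whether a task belongs in the system.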

My personal journey: From early experiments to building a sustainable system

As an early AI adopter, I've been experimenting with these tools for over three years now - long before the current wave of enthusiasm. Those early days were characterized by promising but inconsistent results: brilliant outputs followed by baffling misses.

The real breakthrough came when I shifted from viewing AI as a collection of tools to conceptualizing it as a system that needed proper architecture.

After tracking my results across different approaches, I discovered something crucial: tasks where I built consistent systems around AI usage showed measurable time savings and quality improvements. Tasks where I used AI ad-hoc - even with years of experience - showed minimal or negative ROI.

The difference wasn't the AI technology itself – which has certainly improved dramatically – but rather how I organized my interactions with it. By applying systems thinking to my AI workflows, I've transformed occasional wins into consistent leverage, reclaiming those 5+ hours weekly that I mentioned earlier.

This discovery fundamentally changed how I approach human-AI collaboration, moving from isolated experiments to an integrated command center approach.

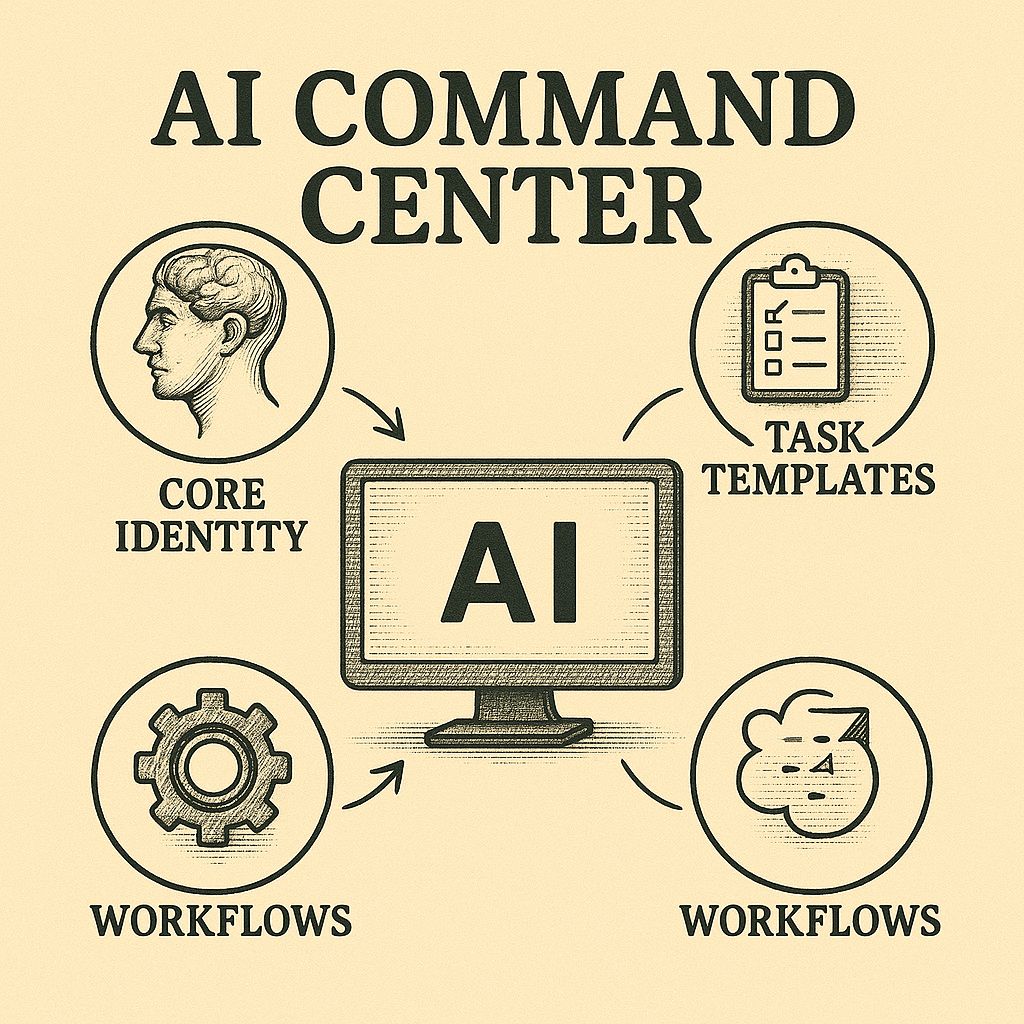

The Command Center Framework

Setting up your 3-tier prompt library (with examples)

The foundation of an effective AI Command Center is a well-organized prompt library. Think of this as your standard operating procedures – the consistent instructions that ensure reliable outputs.

I organize mine in three tiers:

Tier 1: Core Identity Prompts

These define how the AI should "think" and approach problems. They're like setting the operating philosophy:

You are my research assistant with expertise in systems thinking and complex problem analysis.

When analyzing information, prioritize identifying:

1) Underlying patterns and relationships

2) Potential second-order effects

3) Competing hypotheses that explain the data

Always note gaps in available information and avoid overconfident assertions when data is limited.

Tier 2: Task Templates

These are reusable frameworks for common tasks, with clear input/output specifications:

TASK: Email Summarization

INPUT: [Paste email thread]

INSTRUCTIONS:

1. Identify key participants in the conversation

2. Extract main discussion points

3. Highlight any decisions made or action items (with owner and deadline if specified)

4. Note any questions requiring my response

5. Flag any potential concerns or time-sensitive matters

OUTPUT STRUCTURE:

- PARTICIPANTS: [List names and roles]

- SUMMARY: [3-5 sentence overview]

- DECISIONS: [Bullet list]

- ACTION ITEMS: [Bullet list with owners and deadlines]

- REQUIRED RESPONSES: [Questions needing my input]

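- CONCERNS: [Time-sensitive or potential problem areas]

Tier 3: Chain-of-Task Workflows

These chain several prompts into one multi-step process, with explicit points where a human steps back in.

Example: Research Report Creation Workflow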

WORKFLOW: Competitive Analysis Report Creation

STEP 1: Data Collection

[PROMPT: You are my research assistant. I need information on competitor {COMPANY NAME}. Search for their key products/services, recent announcements, market positioning, pricing strategy, and customer sentiment. Format your findings as structured notes with links to sources.]

STEP 2: Gap Analysis

[PROMPT: Review the competitive data collected in Step 1 and identify:

1. Areas where the competitor has advantages over us

2. Areas where we have advantages over them

3. Unaddressed market opportunities neither company is fully exploiting

4. Potential threats from their recent strategic moves

Format as a structured comparison with brief explanations for each point.]

STEP 3: Strategic Recommendations

[PROMPT: Based on the competitive analysis and gap analysis, generate 3-5 actionable recommendations that would strengthen our market position against {COMPANY NAME}.

For each recommendation, include:

- Brief description of the recommendation

- Expected business impact

- Estimated implementation difficulty (low/medium/high)

- Timeframe for results (immediate/short-term/long-term)]

HANDOFF POINT: After Step 3, review recommendations and select which to incorporate into the final report, adding specific context about internal capabilities and resources.

FEEDBACK LOOP: Document which recommendations proved most valuable for refining future competitive analyses.

This workflow helps professionals in any industry conduct thorough competitive research without starting from scratch each time, while preserving human judgment for the most strategic decisions.
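If you keep your library in code rather than a notes app, the three tiers map naturally onto a small data structure. The Python sketch below is one possible layout, not a prescribed one: `PromptLibrary`, `fill`, and `render_workflow` are hypothetical names, the prompts are shortened versions of the examples above, and "Acme Corp" is a made-up competitor. The one deliberate design choice is that `fill` refuses to return a prompt with an unfilled `{PLACEHOLDER}`, so a half-completed template never reaches the AI.

```python
import re
from dataclasses import dataclass, field


@dataclass
class PromptLibrary:
    identity: dict = field(default_factory=dict)   # Tier 1: name -> core identity prompt
    templates: dict = field(default_factory=dict)  # Tier 2: name -> task template
    workflows: dict = field(default_factory=dict)  # Tier 3: name -> ordered (step, prompt) pairs


# Matches slots written like {COMPANY NAME}: capitals, spaces, underscores.
PLACEHOLDER = re.compile(r"\{([A-Z][A-Z _]*)\}")


def fill(prompt, **values):
    """Replace each {PLACEHOLDER}; raise KeyError if any slot has no value.

    Keyword names use underscores in place of spaces (COMPANY_NAME).
    """
    def substitute(match):
        key = match.group(1)
        lookup = key.replace(" ", "_")
        if lookup not in values:
            raise KeyError(f"missing value for {{{key}}}")
        return values[lookup]

    return PLACEHOLDER.sub(substitute, prompt)


def render_workflow(library, name, **values):
    """Return the filled prompt for every step of a workflow, in order."""
    return [(step, fill(prompt, **values)) for step, prompt in library.workflows[name]]


# Shortened, illustrative versions of the prompts shown above.
library = PromptLibrary(
    identity={"research_assistant": "You are my research assistant with expertise in systems thinking."},
    templates={"email_summary": "TASK: Email Summarization\nINPUT: {EMAIL THREAD}"},
    workflows={
        "competitive_analysis": [
            ("Data Collection", "I need information on competitor {COMPANY NAME}."),
            ("Gap Analysis", "Review the competitive data collected in Step 1."),
            ("Strategic Recommendations", "Generate 3-5 recommendations against {COMPANY NAME}."),
        ]
    },
)

for step, prompt in render_workflow(library, "competitive_analysis", COMPANY_NAME="Acme Corp"):
    print(f"{step}: {prompt}")
```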

Creating clear handoff points between AI and human work

The secret to effective AI delegation isn't removing humans – it's defining precisely where human judgment adds the most value.

I identify three types of handoff points:

Quality checkpoints: Brief reviews where humans verify AI output before proceeding

Example: Scanning a research summary for factual errors before incorporating into analysis

Judgment junctions: Decisions requiring values, taste, or nuanced understanding

Example: Selecting which of several AI-generated headlines best fits your brand voice

Refinement loops: Areas where iteration between human and AI creates superior results

Example: Human edits to AI-generated draft, followed by AI polish of human edits

For each workflow in my Command Center, I explicitly document:

What specific elements humans should verify

What success criteria to apply

How to provide feedback to improve future iterations

Failing to define these handoff points is where most AI systems break down, either through over-delegation (letting AI do things it shouldn't) or under-delegation (humans stepping in where they add nothing).

Implementing the 2-minute decision framework for email processing

One of my most effective Command Center applications is email processing – a task that consumed hours of my week.

Here's my 2-minute decision framework for email triage:

First Pass (30 seconds): Scan new emails, applying the following decision tree:

Urgent & important → Handle immediately

Important but not urgent → Delegate to AI for initial processing

Not important → Automated response or archive

AI Processing (happens automatically): For important non-urgent emails:

Summarize key points and requested actions

Draft response options (brief, detailed, or clarifying questions)

Identify any research needed before response

Second Pass (90 seconds per email): Review AI output and:

Select appropriate response option

Personalize as needed (adding warmth or specific context)

Schedule follow-up if required

Send or queue for later sending

This system reduced my email processing time from 90+ minutes to under 30 minutes daily, while improving response quality and consistency.

The key is that 2-minute constraint – it forces clarity about what truly requires human attention versus what can be delegated with appropriate guardrails.
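The first-pass decision tree is simple enough to write down as a function. The Python sketch below assumes you (or a mail rule) have already marked each message as important and/or urgent during the 30-second scan; the `Email` and `Action` types and the sample inbox are hypothetical. Note that, following the tree as written, anything not important goes to auto-response or archive even if it looks urgent.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    HANDLE_NOW = "handle immediately"
    DELEGATE_TO_AI = "delegate to AI for initial processing"
    AUTO_OR_ARCHIVE = "automated response or archive"


@dataclass
class Email:
    subject: str
    important: bool  # set by the human during the first pass (assumption)
    urgent: bool     # set by the human during the first pass (assumption)


def triage(email):
    """Apply the first-pass decision tree to one email."""
    if not email.important:
        return Action.AUTO_OR_ARCHIVE
    if email.urgent:
        return Action.HANDLE_NOW
    return Action.DELEGATE_TO_AI


# Made-up inbox for illustration.
inbox = [
    Email("Contract needs signature today", important=True, urgent=True),
    Email("Feedback on Q3 proposal", important=True, urgent=False),
    Email("Webinar invitation", important=False, urgent=False),
]

for email in inbox:
    print(f"{email.subject} -> {triage(email).value}")
```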

Implementation Guide

Step-by-step setup of your personal AI command center

Here's how to build your Command Center in about an hour:

Inventory your tasks (15 minutes)

List all recurring tasks you perform weekly

Rate each on two scales: time consumed and cognitive difficulty

Identify the high-time, low-cognitive tasks as prime candidates for delegation

Select your tools (5 minutes)

Primary AI assistant (e.g., ChatGPT, Claude, Gemini)

Documentation system (e.g., Notion, Obsidian, Word)

Optional: specialized AI tools for specific domains

Create your prompt library foundation (20 minutes)

Draft 1-2 Core Identity Prompts that reflect your needs

Create 3-5 Task Templates for your most common activities

Document 1 Chain-of-Task Workflow for a complex process

Establish measurement baseline (15 minutes)

For each task you're delegating, document:

Current time to complete manually

Quality criteria for successful completion

Potential failure modes to monitor

Design feedback loop (5 minutes)

Create a simple system to track:

Time saved per task

Quality issues encountered

Prompt improvements made

Start small with 2-3 tasks, perfect those workflows, then gradually expand. The key is building confidence in your system before scaling.
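The task inventory in the first step can be done on paper, but it also fits in a few lines of Python. This sketch makes some assumptions of its own: time is recorded as hours per week, cognitive difficulty is a 1-5 score, and the cut-offs (`min_hours`, `max_difficulty`) and sample tasks are hypothetical values you would tune to your own week.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    hours_per_week: float
    cognitive_difficulty: int  # 1 (routine) to 5 (demanding); the scale is an assumption


def delegation_candidates(tasks, min_hours=1.0, max_difficulty=2):
    """Return high-time, low-cognitive tasks, biggest time sink first."""
    picks = [
        t for t in tasks
        if t.hours_per_week >= min_hours and t.cognitive_difficulty <= max_difficulty
    ]
    return sorted(picks, key=lambda t: t.hours_per_week, reverse=True)


# Made-up inventory for illustration.
inventory = [
    Task("Email summaries", hours_per_week=4.0, cognitive_difficulty=2),
    Task("Meeting notes cleanup", hours_per_week=2.0, cognitive_difficulty=1),
    Task("Strategy memo", hours_per_week=3.0, cognitive_difficulty=5),
    Task("Expense labels", hours_per_week=0.5, cognitive_difficulty=1),
]

for task in delegation_candidates(inventory)[:3]:
    print(f"{task.name}: {task.hours_per_week} h/week")
```

Taking only the top two or three results matches the advice to start small before expanding.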

The 15-minute daily check-in that keeps everything running

The sustainability of your AI Command Center depends on a consistent maintenance routine. I've found that 15 minutes daily is the sweet spot – enough for course correction without becoming burdensome.

Here's my daily check-in structure:

Morning (5 minutes):

Review yesterday's AI task outcomes

Note any quality issues or failures

Prioritize today's AI delegations

Evening (10 minutes):

Document time saved and quality of outputs

Make quick adjustments to problematic prompts

Identify one new task or improvement for tomorrow

What makes this system work is its sustainability. The 15-minute investment yields hours of reclaimed time while continuously improving your system.

I use a simple tracking template:

Tasks delegated today

Estimated time saved

Quality rating (1-5)

Issues encountered

Improvements implemented

This creates accountability and provides data to refine your system over time.
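The tracking template works fine as a spreadsheet. If you would rather log it programmatically, the five fields map onto a small record that can be written out as CSV. In this Python sketch the field names, the added `day` column, and the sample entries are all assumptions made for illustration.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date


@dataclass
class DailyLog:
    day: date
    tasks_delegated: str
    minutes_saved: int   # estimated, as in the template
    quality_rating: int  # 1-5
    issues: str = ""
    improvements: str = ""

    def __post_init__(self):
        if not 1 <= self.quality_rating <= 5:
            raise ValueError("quality_rating must be between 1 and 5")


def write_log(entries, out):
    """Write entries as CSV (with a header row) to any file-like object."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(DailyLog)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))


def total_minutes_saved(entries):
    """Sum the estimated minutes saved across entries."""
    return sum(entry.minutes_saved for entry in entries)


# Made-up entries for illustration.
entries = [
    DailyLog(date(2025, 1, 6), "email triage; meeting notes", 60, 4),
    DailyLog(date(2025, 1, 7), "email triage", 45, 3, issues="summary missed a deadline"),
]

buffer = io.StringIO()
write_log(entries, buffer)
print(buffer.getvalue())
print("Minutes saved so far:", total_minutes_saved(entries))
```

Swapping `io.StringIO()` for `open("ai_log.csv", "w", newline="")` (a hypothetical filename) would write the same rows to disk.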

How to measure AI performance using the "3-dimension evaluation framework"

To truly optimize your Command Center, you need objective measurement beyond just "did it work?"

I evaluate AI performance across three dimensions:

Efficiency

Time saved compared to manual completion

Resources required (API costs, computing resources)

Learning curve for implementation

Quality

Accuracy of information

Clarity and coherence of output

Appropriateness for intended purpose

Consistency across multiple runs

Augmentation

Does it enhance my capabilities?

Does it enable new workflows impossible before?

Does it free mental bandwidth for higher-value activities?

For each task, I assign a 1-5 rating in each dimension, with specific criteria for each score. This creates a numerical "performance fingerprint" for different types of AI tasks, making it clear where your system excels and where it needs improvement.

The magic happens when you use this data to refine your prompts and processes, creating a continuously improving system.
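One way to compute that "performance fingerprint" is to average the 1-5 scores per task across several runs. The Python sketch below assumes plain averaging and treats the lowest average as the dimension to work on next; both choices, along with the names and sample scores, are illustrative rather than a fixed method.

```python
from dataclasses import dataclass
from statistics import mean

DIMENSIONS = ("efficiency", "quality", "augmentation")


@dataclass(frozen=True)
class Rating:
    task: str
    efficiency: int    # 1-5
    quality: int       # 1-5
    augmentation: int  # 1-5


def fingerprint(ratings):
    """Map each task to its average (efficiency, quality, augmentation) scores."""
    by_task = {}
    for rating in ratings:
        by_task.setdefault(rating.task, []).append(rating)
    return {
        task: tuple(mean(getattr(r, dim) for r in group) for dim in DIMENSIONS)
        for task, group in by_task.items()
    }


def weakest_dimension(scores):
    """Name the lowest-scoring dimension (the first one wins a tie)."""
    return DIMENSIONS[scores.index(min(scores))]


# Made-up ratings for illustration.
ratings = [
    Rating("email summary", efficiency=5, quality=4, augmentation=3),
    Rating("email summary", efficiency=4, quality=4, augmentation=3),
    Rating("competitive analysis", efficiency=3, quality=2, augmentation=5),
]

for task, scores in fingerprint(ratings).items():
    print(task, scores, "-> improve", weakest_dimension(scores))
```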

Warning Signs & Guardrails

How to recognize when you're over-delegating

While building your Command Center, watch for these warning signs of over-delegation:

Accuracy drift: When error rates gradually increase as AI handles more complex versions of a task

Context collapse: When AI outputs become generic or miss important nuances specific to your situation

Validation overload: When checking AI work takes more time than doing it yourself

Skill atrophy: When you find your own abilities degrading in areas fully delegated to AI

Enjoyment inversion: When tasks you previously enjoyed become mechanical AI supervision chores

The solution isn't abandoning AI assistance but recalibrating your human-AI boundary. Some tasks benefit from collaboration rather than full delegation – where AI handles the mechanical aspects while you provide the judgment and creativity.
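Two of these warning signs can be checked with numbers you may already be logging. The Python sketch below is a rough proxy, not a diagnostic: it flags validation overload when review takes longer than the manual task, and it approximates accuracy drift by comparing your most recent quality ratings with the ones just before them. The `window` size and sample values are hypothetical.

```python
def validation_overload(review_minutes, manual_minutes):
    """True when checking the AI's work takes longer than doing the task yourself."""
    return review_minutes > manual_minutes


def accuracy_drift(quality_ratings, window=3):
    """Rough proxy: True when the latest `window` ratings average lower
    than the `window` ratings before them. Needs at least 2 * window ratings."""
    if len(quality_ratings) < 2 * window:
        return False
    earlier = quality_ratings[-2 * window:-window]
    recent = quality_ratings[-window:]
    return sum(recent) / window < sum(earlier) / window


# Made-up numbers for illustration.
print(validation_overload(review_minutes=12, manual_minutes=10))  # review costs more than the task
print(accuracy_drift([5, 5, 4, 4, 3, 3]))                         # ratings are sliding
print(accuracy_drift([4, 4, 4, 4, 4, 4]))                         # ratings are steady
```

The other three signs (context collapse, skill atrophy, enjoyment inversion) are judgment calls and are better caught in a periodic review than in a script.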

Establishing ethical guidelines for your AI usage

Your Command Center needs clear ethical guardrails. Here's my personal framework:

Transparency: I disclose when AI has contributed significantly to work I share

Verification: Factual claims generated by AI require human verification before sharing

Attribution: Ideas synthesized by AI from others' work receive proper credit

Responsibility: I remain accountable for all outputs from my AI systems

Authenticity: Personal communications maintain an appropriate human element

Beyond these principles, consider domain-specific guidelines. For example, I have additional rules for research (verification from multiple sources) and creative writing (AI assists structure but doesn't generate core ideas).

Document these guidelines in your Command Center and review them periodically as capabilities evolve.

The importance of maintaining critical thinking skills

Perhaps the most subtle risk of an effective AI Command Center is outsourcing your critical thinking. When systems work well, it's tempting to accept outputs without scrutiny.

To counter this tendency, I've implemented specific practices:

Regular "manual mode" days: One day weekly where I complete key tasks without AI assistance

Deliberate challenge sessions: Periodically questioning AI outputs even when they seem correct

First principles thinking: Regularly revisiting foundational assumptions in my work

Diverse input sources: Ensuring my information diet extends beyond AI-filtered content

Skill development inventory: Tracking which capabilities I'm actively building versus delegating

The goal isn't to limit AI usage but to ensure it amplifies rather than replaces your intellectual development.

Closing Thoughts

Instead of treating AI tools as random assistants, start thinking of them as part of your personal team. By implementing this command center approach, you're not just saving time—you're creating leverage that compounds.

What separates those truly benefiting from AI from those merely experimenting is simple: systems thinking. The tools themselves provide capability, but your frameworks determine whether that capability translates to real-world advantage.

Your AI Command Center will evolve as both the technology and your needs change. The key is building that initial systematic foundation, then keeping it running with the 15-minute daily check-in that yields 5+ hours of weekly return.

Next week, I'll show you how to extend this system to create an AI research assistant that generates insights, not just information. You'll learn how to transform raw data into actionable wisdom using the same systematic approach.

Until then, I'd love to hear: What's the one recurring task consuming your time that you'd most like to delegate to an AI system? Reply to this email and I'll share some specific strategies for your situation.

Always in your corner,

Marcel

The Thinking Edge